Hybrid Kubernetes in production pt. 1

Over the years we have talked with many other public sector companies about their experiences running containers in production. One of the biggest challenges we hear about again and again is running hybrid workloads: how to have some workloads running on-premise and some in the cloud in a good way.

In this newsletter series we will share some of our experiences solving this issue at Kartverket by running Anthos on VMware (or GKE on-prem, if you prefer), tied to the cloud using a hybrid mesh. We will also discuss the reasons we went with Anthos and the pros and cons we have experienced so far.

At Kartverket we have an ambition to adopt cloud native technologies. There are thousands of ways to do this, and after trialing a couple of alternative solutions, including running plain Kubernetes and VMware Tanzu, we decided to go with Anthos. Anthos is a platform that allows us to run Kubernetes clusters on-premise and in the cloud, and manage them from a single pane of glass. We have been running Anthos in production for a while now, at least long enough to be able to share our thoughts.

This newsletter is the first of a three-part series about Anthos in Kartverket.

- Why we chose Anthos (You are here!)

- How we run Anthos

- Benefits and what we would have done differently

So why a hybrid cloud?

Were you to take the time machine back a few years, you would see Kartverket as a traditional enterprise with a lot of knowledge and experience in running on-premise workloads. This knowledge served us well, but also slightly held us back in terms of our imagination. We knew that there had to be a better way, but our enterprise was simply not mature enough to adopt a pure cloud strategy. The fear of the unknown cloud weighed heavily on many people, and therefore few people wanted to take the risk of moving to the cloud.

This is something we've worked on for a long time, and still do. After extensive work with the stakeholders in the organization, we eventually built a cloud strategy, which in simple terms stated that we would prefer SaaS products over hosting things ourselves, and that we would gradually move our workloads to the cloud.

This cloud strategy, however, which cleared up a lot of blockers, came too late for us on SKIP. At that point we had already done most of the work on our on-premise platform, building on the assumptions the organization held at the time: that existing infrastructure met our needs, and that using public cloud had disqualifying cost and compliance implications. For SKIP it was therefore full steam ahead, building the on-prem part first, then adding the hybrid and cloud part later.

It's not like we would have ended up with a pure cloud setup in any case, though. If you're at all familiar with large enterprises, you will know that they are often very complex. This is also true for Kartverket, where we have a lot of existing systems that are not easy to move to the cloud. We also have a lot of systems that are not suitable for the cloud, mostly because they are designed to run in a way that would not be cost effective in the cloud. In addition we have absolutely massive datasets (petabyte-scale) that would be very expensive to move to the cloud.

Because of these limitations, a pure cloud strategy is not considered to be a good fit for us.

A hybrid cloud, however, can give us the scalability and flexibility of the cloud, while still allowing us to run some of our systems on-prem, with the experience being more or less seamless for the developers.

Why we chose Anthos

After some disastrous issues with our previous hybrid cloud PoC (that's a whole story in itself) we decided to look at what alternatives existed on the market. We considered various options, but eventually decided to run a PoC on Anthos. This was based on a series of conditions at the time, to name a few:

- We had a decent pool of knowledge in GCP compared to AWS and Azure at the time

- Some very well established platform teams in the public sector were also using GCP, which meant it would be easier to share work and learnings

- Anthos and GCP seemed to offer a good developer experience, which for us as a platform team is of paramount importance

- A provider like Google is well established in the cloud space (especially Kubernetes), and would have a fully featured, stable and user friendly product

SKIP ran the Anthos PoC over a few months, initially as an on-prem offering only. Drawing on the knowledge of internal network and infrastructure engineers, this took us all the way from provisioning clusters and networking, to iterating on tools and docs and finally onboarding an internal product team on the platform. Once we felt we had learned what we could from the PoC, we gathered thoughts from the product team, infrastructure team and of course the SKIP platform team.

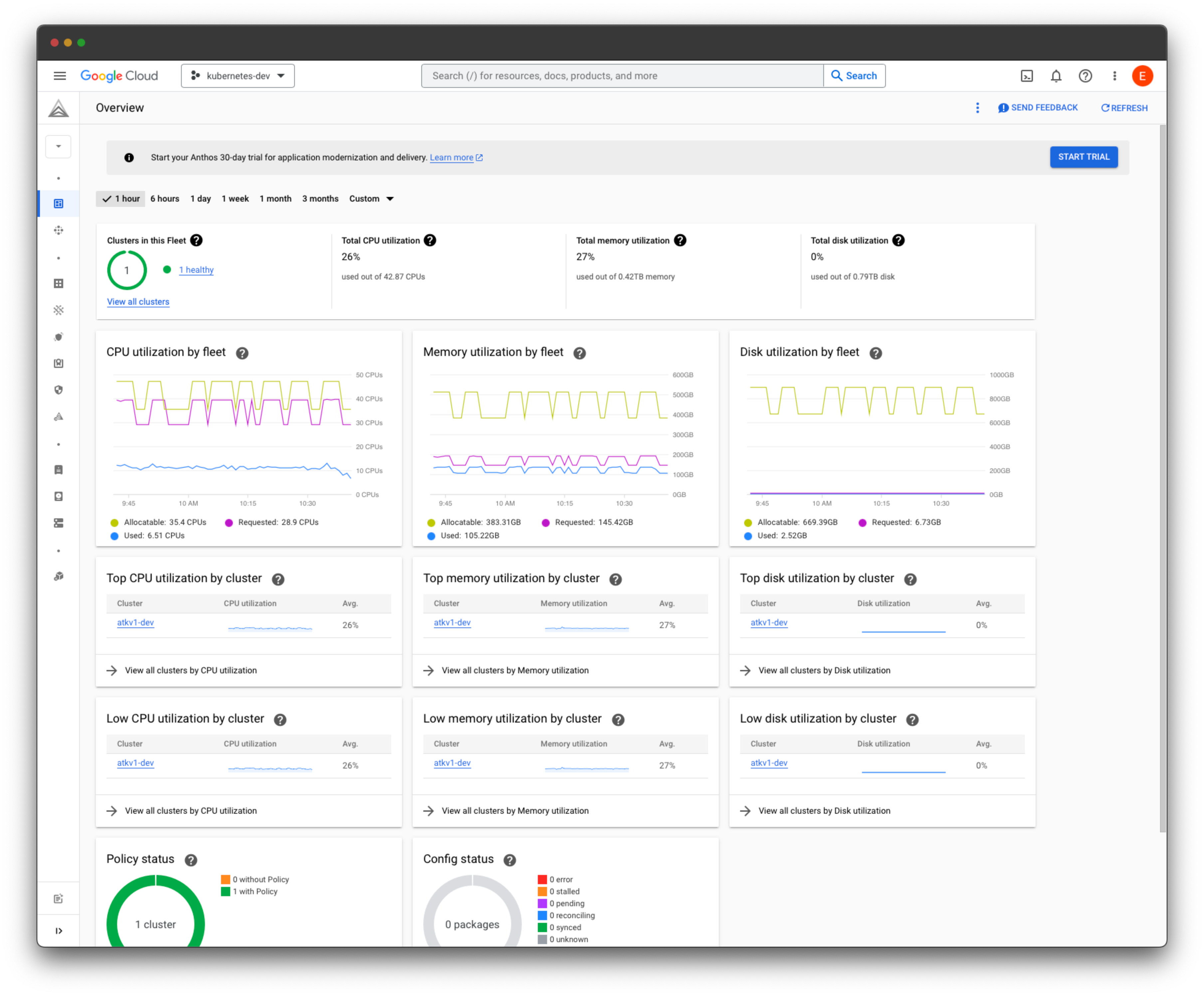

The results were unanimous. All the participants lauded the GCP user interfaces that allowed visibility into running workloads, as well as the new self-service features that came with it. Infrastructure engineers complimented the installation scripts and documentation, which would make it easier to keep the clusters up to date.

Based on the total package we therefore decided to move ahead with Anthos. To infinity and beyond! 🚀

What is Anthos anyway?

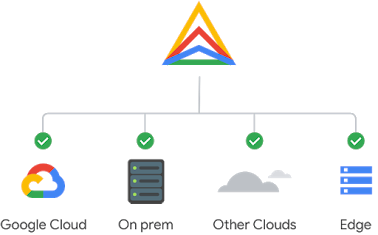

Anthos is Google's solution to multicloud. It's a product portfolio where the main product is GKE (Google Kubernetes Engine) on-premise. Using GKE on-prem you can run Kubernetes clusters on-premise and manage them from the same control plane in Google Cloud, as if they were proper cloud clusters.

In fact, Anthos is truly multi-cloud. That means you can deploy Anthos clusters to GKE and on-prem, but also AWS and Azure. On other cloud platforms it uses the provider's Kubernetes distribution like AKS, but you can still manage it from GKE alongside your other clusters.

In addition to GKE, the toolbox includes:

Anthos Service Mesh (ASM)

A networking solution based on Istio. This is more or less the backbone of the hybrid features of Anthos: provided you've configured a hybrid mesh, it allows applications deployed to the cloud to communicate with on-premise workloads automatically, without manual steps like opening firewalls.

All traffic that flows between microservices on the mesh is also automatically encrypted with mTLS.
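
For readers who have not seen what mTLS enforcement looks like in Istio, here is a minimal sketch that builds a `PeerAuthentication` manifest requiring mTLS for one namespace and prints it as JSON, which `kubectl apply -f` accepts. The helper name `strict_mtls_policy` and the namespace `example-team` are assumptions made for illustration. Whether you need a resource like this at all depends on how the mesh is configured, and it is not a description of our own setup.

```python
import json


def strict_mtls_policy(namespace: str) -> dict:
    """Build an Istio PeerAuthentication manifest that only accepts mTLS traffic.

    Hypothetical helper: it returns plain data and does not talk to a cluster.
    """
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        # STRICT rejects plaintext connections to workloads in the namespace.
        "spec": {"mtls": {"mode": "STRICT"}},
    }


if __name__ == "__main__":
    # "example-team" is a made-up namespace name.
    print(json.dumps(strict_mtls_policy("example-team"), indent=2))
```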

Anthos Config Management (ACM)

A way to sync git repos into a running cluster. Think GitOps here. Build a repo containing all your Kubernetes manifests and sync them into your cluster, making cluster maintenance easier.
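
The GitOps idea can be sketched as a comparison between the manifests in the repo (the desired state) and what is running in the cluster (the live state). The function below is a simplified illustration of that reconciliation step only. The name `plan_sync` and the resource keys are assumptions, and this is not how ACM is implemented internally.

```python
def plan_sync(desired: dict, live: dict) -> dict:
    """Work out what a sync would have to do to make the cluster match git.

    Both arguments map a resource key such as "Deployment/web" to its manifest.
    """
    return {
        # In git but missing from the cluster.
        "create": sorted(k for k in desired if k not in live),
        # In both places, but the cluster has drifted from git.
        "update": sorted(k for k in desired if k in live and desired[k] != live[k]),
        # In the cluster but no longer in git.
        "delete": sorted(k for k in live if k not in desired),
    }


if __name__ == "__main__":
    repo = {"Deployment/web": {"replicas": 3}, "Service/web": {"port": 80}}
    cluster = {"Deployment/web": {"replicas": 2}, "ConfigMap/old": {"data": {}}}
    print(plan_sync(repo, cluster))
```

The useful property is that the repo is the only place anyone has to edit: manual changes in the cluster show up as drift and get reverted on the next sync.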

ACM also includes a policy controller based on Open Policy Agent (OPA) Gatekeeper, which allows platform developers to build guardrails into developers' workflows using policies like "don't allow containers to run as root".
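
To make the "don't allow containers to run as root" guardrail concrete, here is a rough sketch of the kind of check such a policy performs on a pod manifest. Real Gatekeeper policies are written in Rego and evaluated at admission time; this Python version only illustrates the logic. The function name `containers_allowed_to_run_as_root` is an assumption, and the check is deliberately simplified (it does not look at the image's default user, for example).

```python
def containers_allowed_to_run_as_root(pod: dict) -> list:
    """Return the names of containers that are not prevented from running as root.

    A container passes if it sets runAsNonRoot to true or a non-zero runAsUser,
    either directly or through the pod-level securityContext.
    """
    spec = pod.get("spec", {})
    pod_ctx = spec.get("securityContext") or {}
    offenders = []
    for container in spec.get("containers", []) + spec.get("initContainers", []):
        # Container-level settings override pod-level settings.
        ctx = {**pod_ctx, **(container.get("securityContext") or {})}
        uid = ctx.get("runAsUser")
        non_root = ctx.get("runAsNonRoot") is True
        safe = uid != 0 and (non_root or uid is not None)
        if not safe:
            offenders.append(container["name"])
    return offenders


if __name__ == "__main__":
    pod = {
        "spec": {
            "containers": [
                {"name": "web", "securityContext": {"runAsNonRoot": True}},
                {"name": "sidecar"},
            ]
        }
    }
    print(containers_allowed_to_run_as_root(pod))
```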

Anthos Connect Gateway

The connect gateway allows developers to log on to the cluster using gcloud and kubectl commands, despite the cluster potentially being behind a firewall. From a user experience standpoint this is quite useful, as devs will be logged in to GCP using two-factor authentication, and the same strong authentication allows you to access Kubernetes on-premise.

Connect Gateway also integrates with GCP groups, enabling RBAC in Kubernetes to be assigned to groups instead of manually administered lists of users.
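
As an illustration of group-based RBAC, the sketch below builds a Kubernetes `RoleBinding` that grants the built-in `view` ClusterRole to a group in one namespace, and prints it as JSON for `kubectl apply -f`. The helper name `group_role_binding`, the namespace `example-team` and the group address `team-a@example.com` are made up for the example. How group names must be formatted depends on how the cluster's identity integration is set up.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def group_role_binding(name: str, namespace: str, group: str, cluster_role: str = "view") -> dict:
    """Build a RoleBinding that grants a ClusterRole to a group within one namespace."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": name, "namespace": namespace},
        # The subject is a group rather than a list of individual users.
        "subjects": [{"kind": "Group", "apiGroup": RBAC_API_GROUP, "name": group}],
        "roleRef": {"kind": "ClusterRole", "apiGroup": RBAC_API_GROUP, "name": cluster_role},
    }


if __name__ == "__main__":
    binding = group_role_binding("team-a-view", "example-team", "team-a@example.com")
    print(json.dumps(binding, indent=2))
```

With a binding like this, adding or removing someone from the group is enough to change their access, and the manifest itself never has to be touched.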

Currently the connect gateway only supports stateless requests, for example kubectl get pods or kubectl logs (including -f). It does not support port-forward, exec or run, which can be a bit annoying.
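
One way to soften this limitation is a small pre-flight check in whatever wrapper script developers use, so they get a clear message instead of a confusing failure. The sketch below encodes only the three unsupported subcommands named above. The function name `works_through_gateway` is an assumption, and so is the simplification that the subcommand is the first argument after `kubectl`.

```python
# Subcommands listed above as not working through the connect gateway.
UNSUPPORTED_SUBCOMMANDS = {"port-forward", "exec", "run"}


def works_through_gateway(kubectl_args: list) -> bool:
    """Return False if the kubectl subcommand is one of the unsupported ones.

    Assumes the subcommand is the first argument, e.g. ["exec", "-it", "web", "--", "sh"].
    """
    if not kubectl_args:
        return True
    return kubectl_args[0] not in UNSUPPORTED_SUBCOMMANDS


if __name__ == "__main__":
    for args in (["get", "pods"], ["logs", "-f", "web"], ["exec", "-it", "web", "--", "sh"]):
        status = "ok" if works_through_gateway(args) else "not supported via connect gateway"
        print("kubectl", " ".join(args), "->", status)
```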

Summary

As you can see, the tools above give us a lot of benefits:

- Combined with the power of Google Cloud and Terraform, they give us flexibility through cloud services

- They ease maintenance through the tooling that Anthos and Terraform supply us

- They ease the compliance and modernization burden by allowing a gradual or partial migration to the cloud, where parts can remain on-premise while still retaining most of the modern tooling of the cloud

That's it for now! 🙂 We'll be back with more details on how we run Anthos as well as the pros and cons we've seen so far in the coming weeks. Stay tuned!

Disclaimer - Google, GKE and Anthos are trademarks of Google LLC and this website is not endorsed by or affiliated with Google in any way.